Pentagon reaches important waypoint in long journey toward adopting ‘responsible AI’

There’s a lot to unpack in the Pentagon’s new high-level plan of action to ensure all artificial intelligence use under its purview abides by U.S. ethical standards. Experts are weighing in on what the document means for the military’s pursuit of this crucial technology.

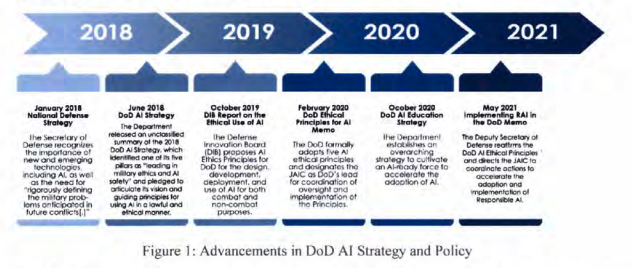

In many ways, the Responsible AI Strategy and Implementation Pathway, released last week, marks the culmination of years of work in the Defense Department to drive the adoption of such capabilities. At the same time, it’s also an early waypoint on the department’s long and ongoing journey that sets the tone for how key defense players will help safely implement and operationalize AI, while racing against competitors with less cautious approaches.

“As a nation, we’re never going to field a system quickly at the cost of ensuring that it’s safe, that it’s secure, and that it’s effective,” DOD’s Chief for AI Assurance Jane Pinelis said at a recent Center for Strategic and International Studies event.

“Implementing these responsible AI guidelines is actually an asymmetric advantage over our adversaries, and I would argue that we don’t need to be the fastest, we [just] need to be fast enough. And we need to be better,” she added.

The term “AI” generally refers to a blossoming branch of computer science involving systems capable of performing complex tasks that typically require some human intelligence. The technology has been widely adopted in society, underpinning maps and navigation apps, facial recognition, chatbots, social media monitoring, and more. And Pentagon officials have increasingly prioritized procuring and developing AI for specific mission needs in recent years.

“Over the coming decades, AI will play a role in nearly all DOD technology, just as computers do today,” Gregory Allen, AI Governance Project director and Strategic Technologies Program senior fellow at CSIS, told FedScoop. “I think this is the right next step.”

The Pentagon’s new 47-page responsible AI implementation plan will inform its work to sort through the incredibly thorny known and unknown issues that could come with fully integrating intelligent machines into military operations. FedScoop spoke with more than a half dozen experts and current and former DOD officials to discuss the nuances within this foundational policy document and their takeaways about the road ahead.

“I’ll be interested in how this pathway plays out in practice,” Megan Lamberth, associate fellow in the Center for a New American Security’s Technology and National Security Program, noted in an interview.

“Considering this implementation pathway as a next step in the Department’s RAI process — and not the end — then I think [its] lines of effort begin to provide some specificity to the department’s AI approach,” she said. “There’s more of an understanding of which offices in the Pentagon have the most skin in the game right now.”

Principles alone are not enough

Following leadership mandates and consultations with a number of leading AI professionals over many months, the Pentagon officially issued a series of five ethical principles to govern its use of the emerging technology in early 2020. At the time, the U.S. military was the first in the world to adopt such AI ethics standards, according to Pinelis.

A little over a year later, the department reaffirmed its commitment to them and released corresponding tenets that serve as priority areas to shape how the department approaches and frames AI. Now, each of those six tenets (governance, warfighter trust, product and acquisition, requirements validation, the responsible AI ecosystem, and workforce) has been fleshed out with detailed goals, lines of effort, responsible components and estimated timelines via the new strategy and implementation plan.

“Principles alone are not enough when it comes to getting senior leaders, developers, field officers and other DOD staff on the same page,” Kim Crider, managing director at Deloitte, who leads the consulting firm’s AI innovation for national security team, told FedScoop. “Meaningful governance of AI must be clearly defined via tangible ethical guidance, testing standards, accountability checks, human systems integration and safety considerations.”

Crider, a retired major general who served 35 years in the military, was formerly the chief innovation and technology officer for the Space Force and the chief data officer for the Air Force. In her view, “the AI pathway released last week appears to offer robust focus and clarity on DOD’s proposed governance structure, oversight and accountability mechanisms,” and marks “a significant step toward putting responsible AI principles into practice.”

“It will be interesting to see the DOD continue to explore and execute these six tenets as new questions concerning responsible AI implementation naturally arise,” she added.

The pathway’s rollout comes on the heels of a significant bureaucratic shakeup that merged several of DOD’s technology-focused components — Advana, Office of the Chief Data Officer, Defense Digital Service, and Joint Artificial Intelligence Center (JAIC) — under the nascent Chief Digital and Artificial Intelligence Office (CDAO).

David Spirk, the Pentagon’s former chief data officer who helped inform the CDAO’s establishment, said this pathway’s “emphasis on modest centralization of testing capability and leadership with decentralized execution” is an “indication of the maturity of thought in how the Office of the Secretary of Defense is positioning the CDAO to drive the initiatives successfully into the future when they will be even more important.”

It’s “a clear demonstration of the DOD’s intent to lead the way for anyone considering high consequence AI employment,” Spirk, who is now a special adviser for CalypsoAI, told FedScoop.

Prior to joining CSIS, Allen was Spirk’s colleague in DOD, serving as the JAIC’s director of strategy and policy, where he, too, was heavily involved in guiding the enterprise’s early pursuits with AI. Even where the new pathway’s inclusions seem modest, in his view, “they are actually quite ambitious.”

“The DOD includes millions of people performing hundreds of billions of dollars’ worth of activity,” he said. “Developing a governance structure where leadership can know and show that all AI-related activities are being performed ethically and responsibly, including in situations with life-and-death stakes, is no easy task.”

Beyond clarifying how the Pentagon’s leadership will “know and show” that their strategy is being implemented as envisioned, other experts noted how the pathway provides additional context and distinctions for programs, offices and industry partners to guide their planning in their connected paths toward robust RAI frameworks.

“Perhaps most importantly, the document provides additional structure and nomenclature that industry can utilize in collaboration activities, which will ultimately be required to achieve scale,” Booz Allen Hamilton’s Executive Vice President for AI Steve Escaravage, an early advocate of RAI, told FedScoop.

“I view it as industry’s responsibility to provide the department insights on the next layer of standards and practices to assist the department’s efforts,” he said.

A journey toward ‘trust’

The Pentagon’s “desired end-state for RAI is trust,” officials wrote in the new pathway.

Though a clear DOD-aligned definition of the term isn’t included, “trust” is mentioned dozens of times throughout the new plan.

“In AI assurance, we try not to use the word ‘trust,’” Pinelis told FedScoop. “If you look up ‘trust,’ it has something like 300 definitions, and most of them are very qualitative — and we’re trying to get to a place that’s very objective.”

In her field, experts use the term “justified confidence,” which is considered evidence-based, more well-defined, and embraces testing and metrics to back it up.

“But of course, in some of the like softer kind of sciences and software language, you will see ‘trust,’ and we try to reserve it either for kind of warfighter trust in their equipment, which manifests in reliance — like literally will the person use it — and that’s how I kind of measure it very tangibly. And then we also use it kind of in a societal context of like our international allies trusting that we won’t field weapons or systems that are going to cause fratricide or something along those lines,” Pinelis explained.

While complicated by the limits of language, this overarching approach is meant to help diverse Pentagon AI users have justifiable and appropriate levels of trust in all systems they lean on, which would in turn help accelerate adoption.

“AI only gets employed in production if the senior decision-makers, operators, and analysts at echelon have the confidence it works and will remain effective regardless of mission, time and place,” Spirk noted. During his time at the Pentagon, he came to recognize that “trust in AI is and will increasingly be a cultural challenge until it’s simply a norm — but that takes time.”

Spirk and the majority of other officials whom FedScoop spoke to highlighted the significance of the new responsibilities laid out in the goals and lines of effort for the second tenet in the pathway, which is warfighter trust. Through them, DOD commits to a robust focus on independent testing and validation, including new training for staff, real-time monitoring, harnessing commercially available technologies and more.

“This is one of the most important steps in making sure that [the Office of the Secretary of Defense] is setting conditions to provide the decision advantage the department and its allies and partners need to outpace our competitors at the speed of the best available commercial compute, whether cloud-based or operating in a disadvantaged and/or disconnected comms environment,” Spirk said.

Allen also noted that tasks under that second tenet “are big.”

“One of the key challenges in accelerating the adoption of AI for DOD is that there generally aren’t mature testing procedures that allow DOD organizations to prove that new AI systems meet required standards for mission critical and safety critical functions,” he explained. By investing now in maturing the AI test and evaluation ecosystem, DOD can prevent a future process bottleneck in which promising AI systems in development can’t be operationally fielded because there is not enough testing capacity.

“Achieving trust in AI is a continuous effort, and we see a real understanding of this approach throughout the entire plan,” Deloitte’s Crider said. She and Lamberth both commended the pathway’s push for flexibility and an iterative approach.

“I like that the department is recognizing that emerging and future applications of AI may require an updated pathway or different kinds of oversight,” Lamberth noted.

In her view, all the categories under each line of effort “cover quite a bit of ground.”

One calls for the creation of a DOD-wide central repository of “exemplary AI use cases,” for instance. Others concentrate on procurement processes and system lifecycles, as well as what Lamberth deemed “much-needed talent” initiatives, like developing a mechanism to identify and track AI expertise and completely staffing the CDAO.

“While all the lines of effort listed in the pathway are important to tackle, the ones that stick out to me are the ones that call for formal activation of key processes to drive change across the organization,” Crider said.

She pointed to “the call for best practices to incorporate operator input and system feedback throughout the AI lifecycle, the focus on developing responsible AI-related acquisition resources and tools, the use of a Joint Requirements Oversight Council Memorandum (JROC-M) to drive changes in requirement-setting processes and the development of a legislative strategy to ensure appropriate engagement, messaging and advocacy to Congress,” in particular.

“Each of these lines of effort is critical to the long-term success of the pathway because they help drive systemic change, emphasize the need for resources and reinforce the goals of the pathway at the highest levels of the organization,” she said.

A variety of assigned activities are also associated with what could soon be major military policy revamps.

For example, the department commits to addressing AI ethics in its upcoming update of the policy on autonomy in weapons systems, DOD Directive 3000.09. And it calls for CDAO and other officials to explore whether a review procedure is needed to ensure that warfare capabilities will be consistent with the DOD’s ethics principles.

Spirk and other experts noted that such an assessment would be prudent for the Pentagon.

“As AI is developed and deployed across the department, it will reshape and improve our warfighting capabilities. Therefore, it is critical to consider how AI principles and governance align with overall military doctrine and operations,” Crider said.

Allen added that this line of effort demonstrates the department’s recognition that many relevant existing DOD processes, such as weapons development legal reviews and existing safety standards, apply to all systems and not just AI-enabled ones.

“The DOD is still assessing whether the right approach is to consider a new, standalone review process focused on RAI or whether to update the existing processes. I’m strongly in favor of the latter approach,” he said. “DOD should build RAI into — not on top of — the existing institutions and processes that ensure lawful, safe and ethical behavior.”

Wait and see

It is undoubtedly difficult for large, complex bureaucratic organizations like the Pentagon to prioritize the implementation of tech-driving strategic plans while balancing other mission-critical work.

Experts who spoke to FedScoop generally agreed that by identifying specific alignment tasks with existing DOD directives and frameworks from other offices, and outlining who will carry them out, the implementation pathway ensures greater integration and gives everyone involved some accountability for execution.

Still, some concerns remain.

“Looking ahead, I think that many of the ambitions of the … pathway are in tension with the department’s technology infrastructure and security requirements. Creating shared repositories and workspaces requires the cloud, and it doesn’t work if data are siloed and access to open applications is restricted,” Melanie Sisson, a fellow in the Brookings Institution’s Center for Security, Strategy, and Technology, told FedScoop.

Spirk also noted that “a vulnerability in comprehensive oversight and leadership exists here, as the technical talent with domain expertise to understand how to both measure and overcome obstacles to gaps and weaknesses that will be illuminated will likely be significant.”

To address these and many other unforeseen concerns, DOD could potentially benefit from developing a feedback mechanism and working body among the individuals and teams tasked as operational responsible AI leads, some experts recommended.

“It is important to keep the lines of communication open — both horizontally and vertically. Challenges that may come up during the implementation phase at the team or project level may be common issues across DOD,” Crider said.

The impact of the implementation plan remains to be seen. And investments in people, power and dollars will be needed to effectively guide, drive, test, apply and integrate responsible AI across the enterprise.

But the officials FedScoop spoke to are mostly hopeful about what’s to come.

“Looking at the lines of effort and the offices responsible for each, it is clear the department has made strides in establishing offices and processes for responsible AI development and adoption. While a lot of hard work remains, the department continues to show that it is committed to AI,” Lamberth said.

“I’ll be interested to see how this guidance is communicated to the rest of the department,” she added. “How will it be communicated that these efforts are important across the services, and how will this pathway impact how the services develop and acquire potential AI technologies?”