Government gears up to embrace generative AI

Artificial intelligence (AI) is on the brink of transforming government agencies, promising to elevate citizen services and boost workforce productivity. As agencies gear up to integrate generative AI into their operations, understanding the opportunities, concerns and sentiments surrounding it becomes crucial to preparing for this AI-driven era of government operations.

A new report, “Gauging the Impact of Generative AI on Government,” finds that three-fourths of agency leaders polled said their agencies have already begun establishing teams to assess the impact of generative AI and are planning to implement initial applications in the coming months.

Based on a new survey of 200 prequalified government program and operations executives and IT and security officials, the report identifies the critical issues and concerns executives face as they consider adopting generative AI in their agencies.

The report, produced by Scoop News Group for FedScoop and underwritten by Microsoft, is among the first government surveys to assess the perceptions and plans of federal and civilian agency leaders following a series of White House initiatives to develop safeguards for the responsible use of AI. The U.S. government recently disclosed that more than 700 AI use cases are underway at federal agencies, according to a database maintained by AI.gov.

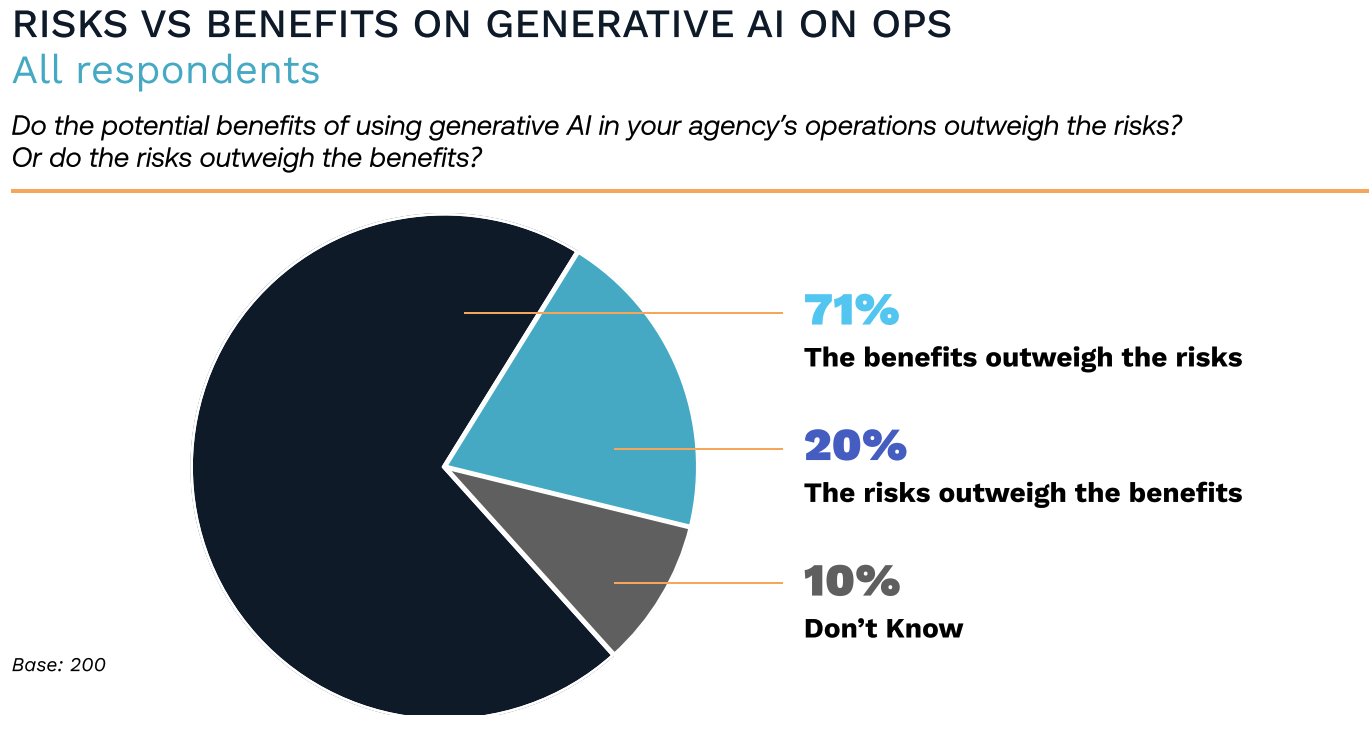

The findings show that a majority of respondents are actively exploring the application of generative AI. An overwhelming 84% of respondents indicated that their agency leadership considers understanding the impact of generative AI a critical or important priority for agency operations. Significantly, 71% believe that the potential advantages of employing generative AI in their agency’s operations outweigh the perceived risks.

However, the survey also highlights several areas of hesitation that warrant attention. The top concerns related to the risks of using generative AI include a lack of controls to ensure ethical/responsible information generation, a lack of ability to verify/explain generated output and potential abuse/distortion of government-generated content in the public domain.

These are unquestionably valid concerns that agencies must confront head-on. Encouragingly, many agencies are already taking proactive steps to address these challenges, with a significant proportion (71%) having established enterprise-level teams or offices devoted to developing AI policies and resources—a testament to their commitment to effective AI governance.

Impact on agency functions

Despite these concerns, generative AI is poised to significantly impact a range of agency functions and use cases. Nearly half of agencies are gearing up to explore generative AI’s potential within the next 12 months.

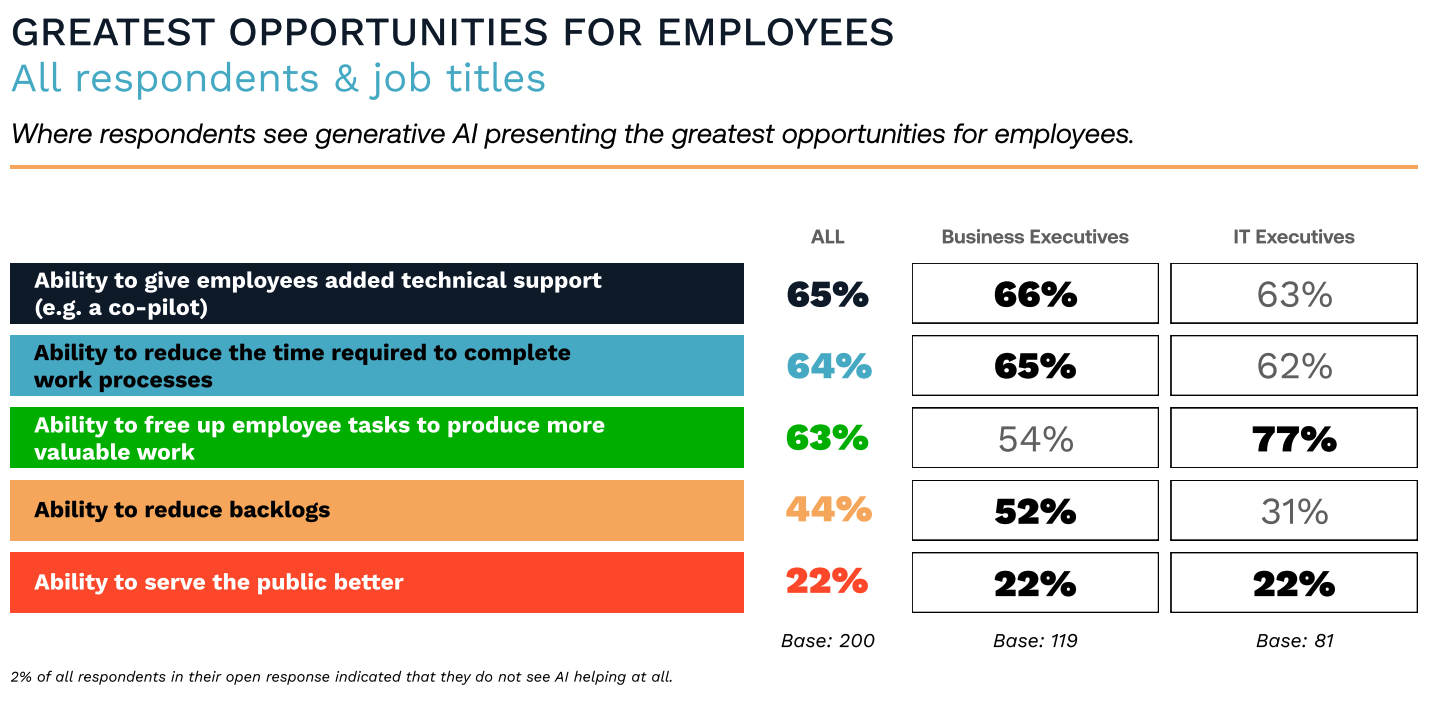

In particular, leaders are eyeing improvements in their business operations and workflows, with 38% expressing confidence that generative AI can enhance efficiency. Close to two-thirds of respondents said they believe generative AI is likely to help employees reduce the time required to complete work processes and free up time for more valuable work.

Another domain where generative AI is gaining traction is mission intelligence and execution. Over half of the respondents are either assessing or planning to evaluate its impact, with a similar percentage looking to implement AI to support mission activities. Confidence levels in generative AI’s ability to deliver value and cost savings in this realm are encouraging.

Interestingly, the survey underscores differing points of view about implementation priorities between business and IT executives. Roughly half of the agency business executives polled expect one or more generative AI applications to be rolled out in the next 6-12 months for data analytics, IT development/cybersecurity and business operations. In contrast, roughly two-thirds of IT executives, presumably closer to implementation rhythms, see business operations getting new generative AI applications in the next 6-12 months, followed by case management and cybersecurity use cases.

However, the findings suggest a deeper story about the need for training, according to Steve Faehl, federal security chief technology officer at Microsoft, who previewed the results. Half of all executives polled (and 64% of those at defense and intelligence agencies) cited a lack of employee training to use generative AI responsibly as a top concern, but only 32% cited the need to develop employee training programs as a critical employee concern, suggesting a potential disconnect in how organizations view AI training.

“Effective training from industry could close one of the biggest risks identified by the U.S. government in support of responsible AI objectives and government employees,” he said. “Those who develop controls having an understanding of risk from their own use cases will create a fast path for controls for other use cases.” As a best practice, he added, “experimentation, planning, and policymaking should be iterative and progress hand-in-hand to inform policymakers of real-world versus perceived risks and benefits.”

Recommendations

As government agencies balance innovation and responsibility in serving the public’s interest, generative AI will require agency leaders to confront a new set of dynamics and begin recalibrating several strategic decisions in the near term, the study concludes. Among other conclusions from the findings, the study recommends agency executives prepare for a faster pace of change and establish flexible governance policies that can evolve as AI applications evolve.

It also recommends facilitating environments for experimentation. By allowing a broad range of employees to experience generative AI’s potential, agencies stand to learn faster and address lingering worries about job security and satisfaction.

In addition to prioritizing use cases, the study recommends that agency leaders devote greater attention to training employees to use generative AI responsibly and capitalize on emerging resources, including NIST’s AI Risk Management Framework and resources assembled by the National AI Initiative.

Download “Gauging the Impact of Generative AI on Government” for the detailed findings.

This article was produced by Scoop News Group for FedScoop and sponsored by Microsoft.